Alerts and Detections

DTonomy ingests security alerts from different platforms so that you can analyze, correlate and respond to them.

Ingestion

After onboarding, you can start ingesting your data into DTonomy. DTonomy has out-of-the-box integrations with a variety of tools, and you can also post data to a DTonomy endpoint from Syslog or other applications. To request additional integrations, contact us at info@dtonomy.com.

At a high level, DTonomy supports two data ingestion methods:

- Pull: DTonomy pulls data from the third-party system.

- Post: the third-party system posts data to a DTonomy endpoint.

Pull

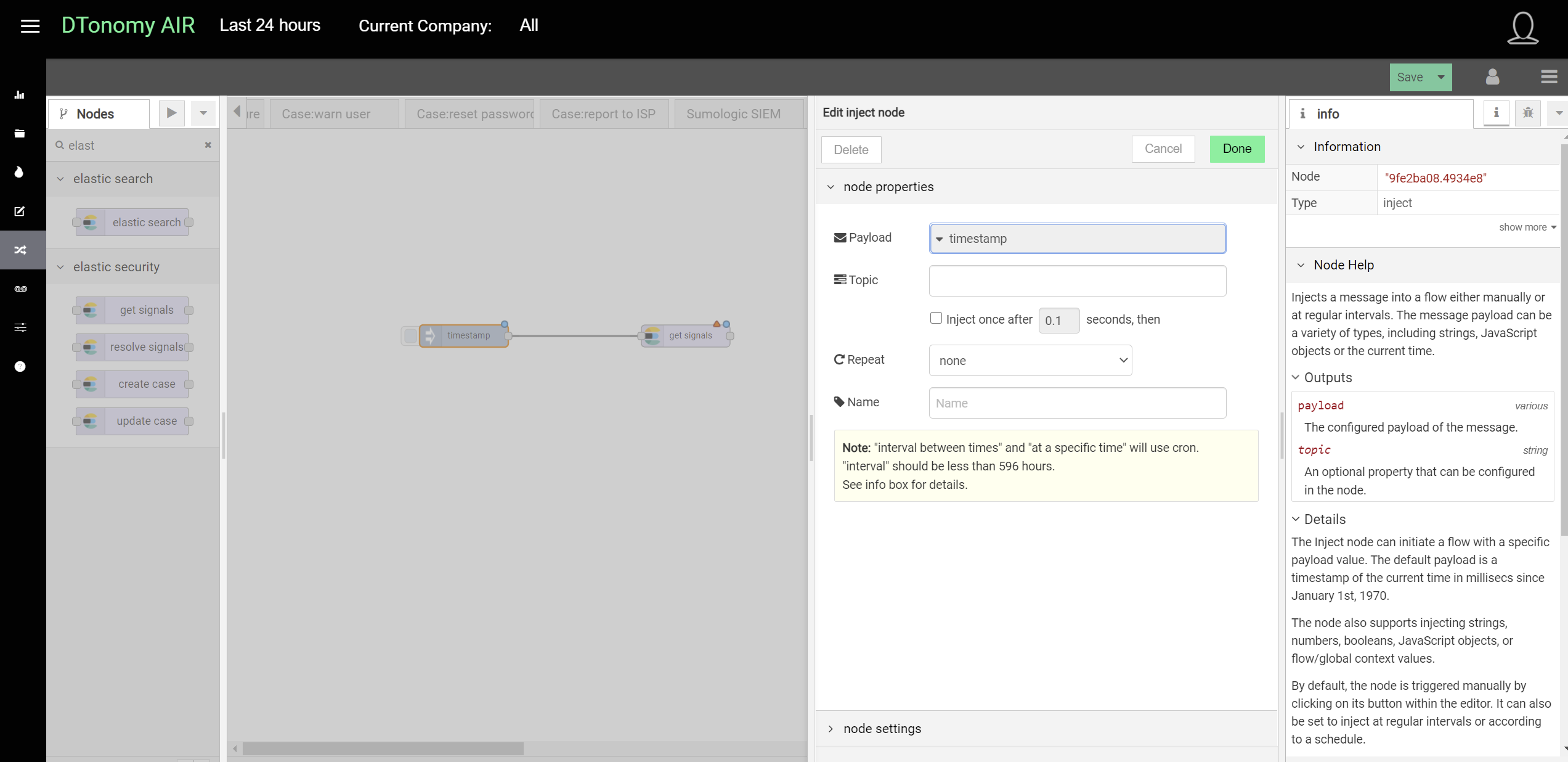

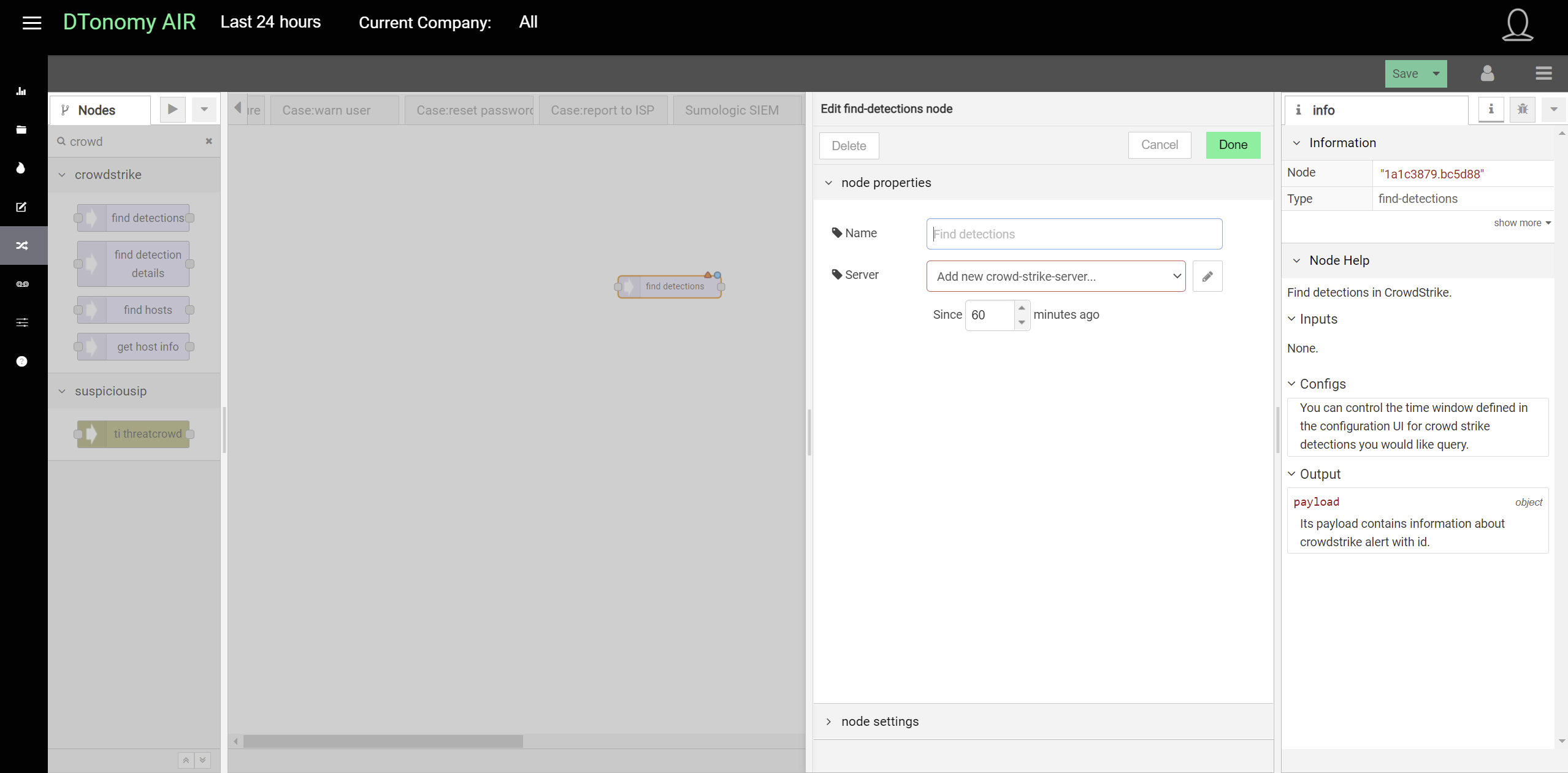

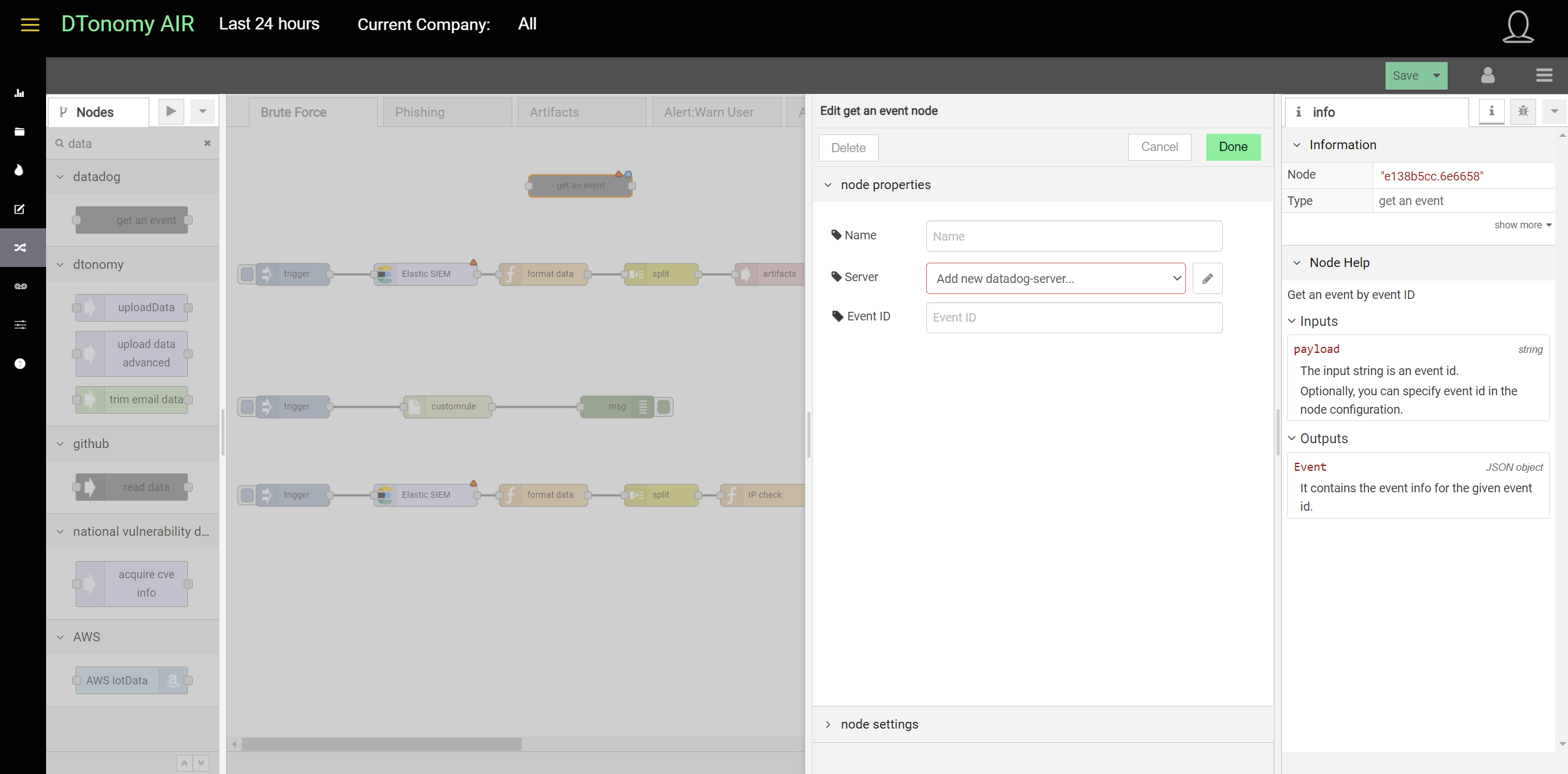

On DTonomy’s platform, you can create a workflow that continuously retrieves data from a third-party system using the existing third-party connectors. The example shown here retrieves data from Elasticsearch.

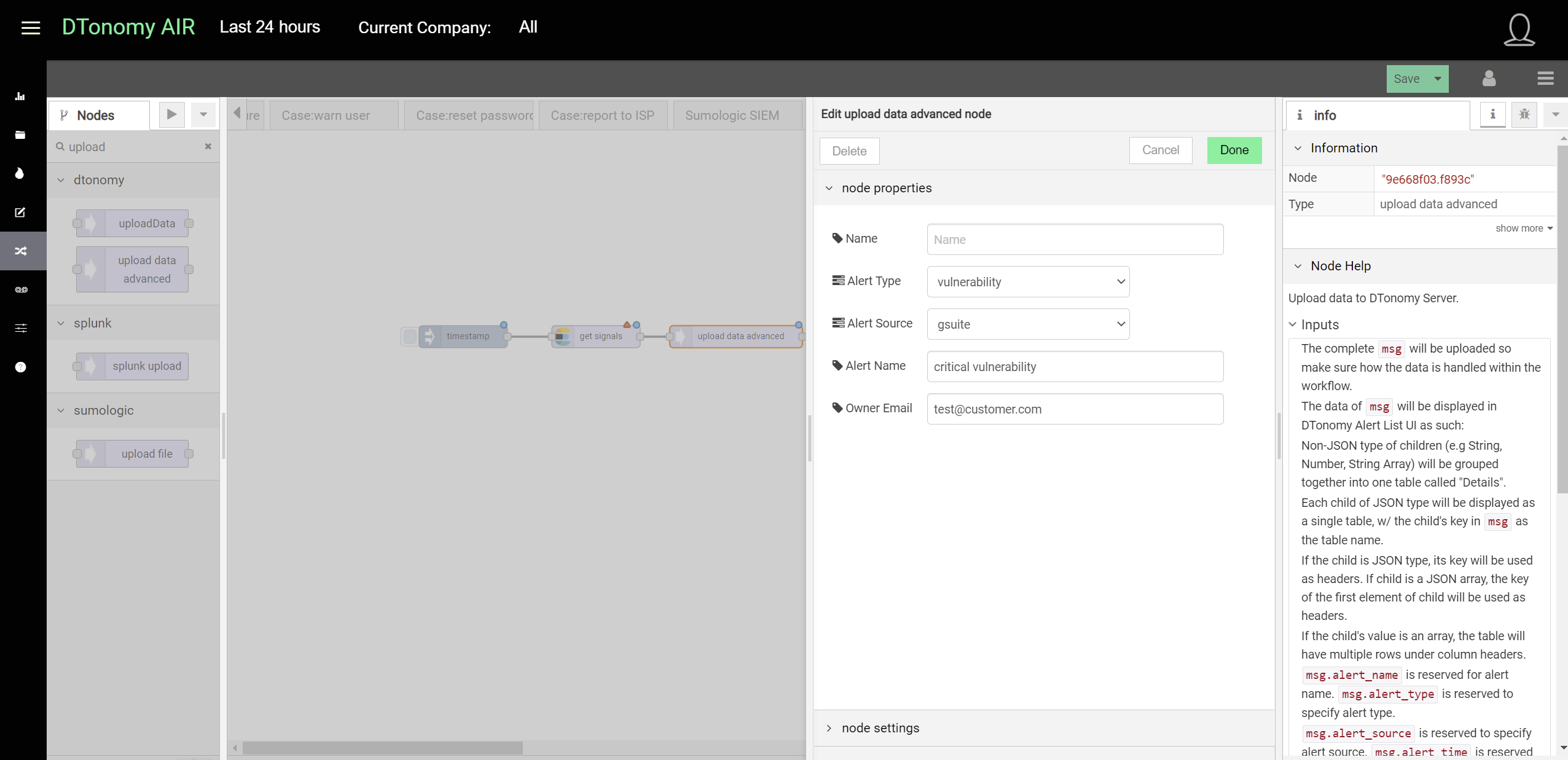

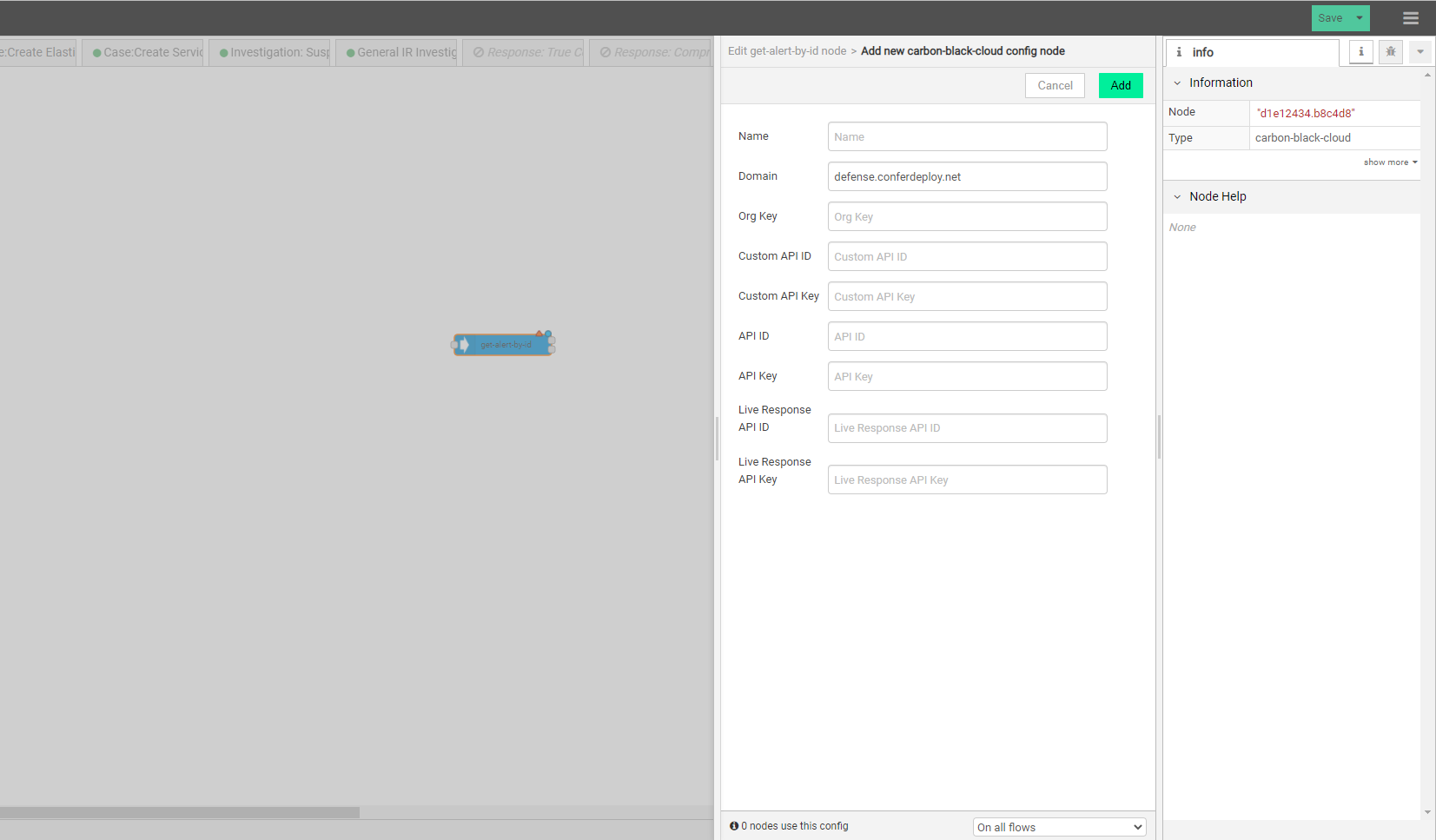

The first node is a trigger (inject) node that determines when data is retrieved from the third party, either once or on a schedule. The second node is a connector that wraps the third-party API. After you enter the required authentication information, the connector calls the third-party API and retrieves data as specified in its configuration.

Appending an “Upload data advanced” node to the end of the workflow uploads the data directly to DTonomy’s server.

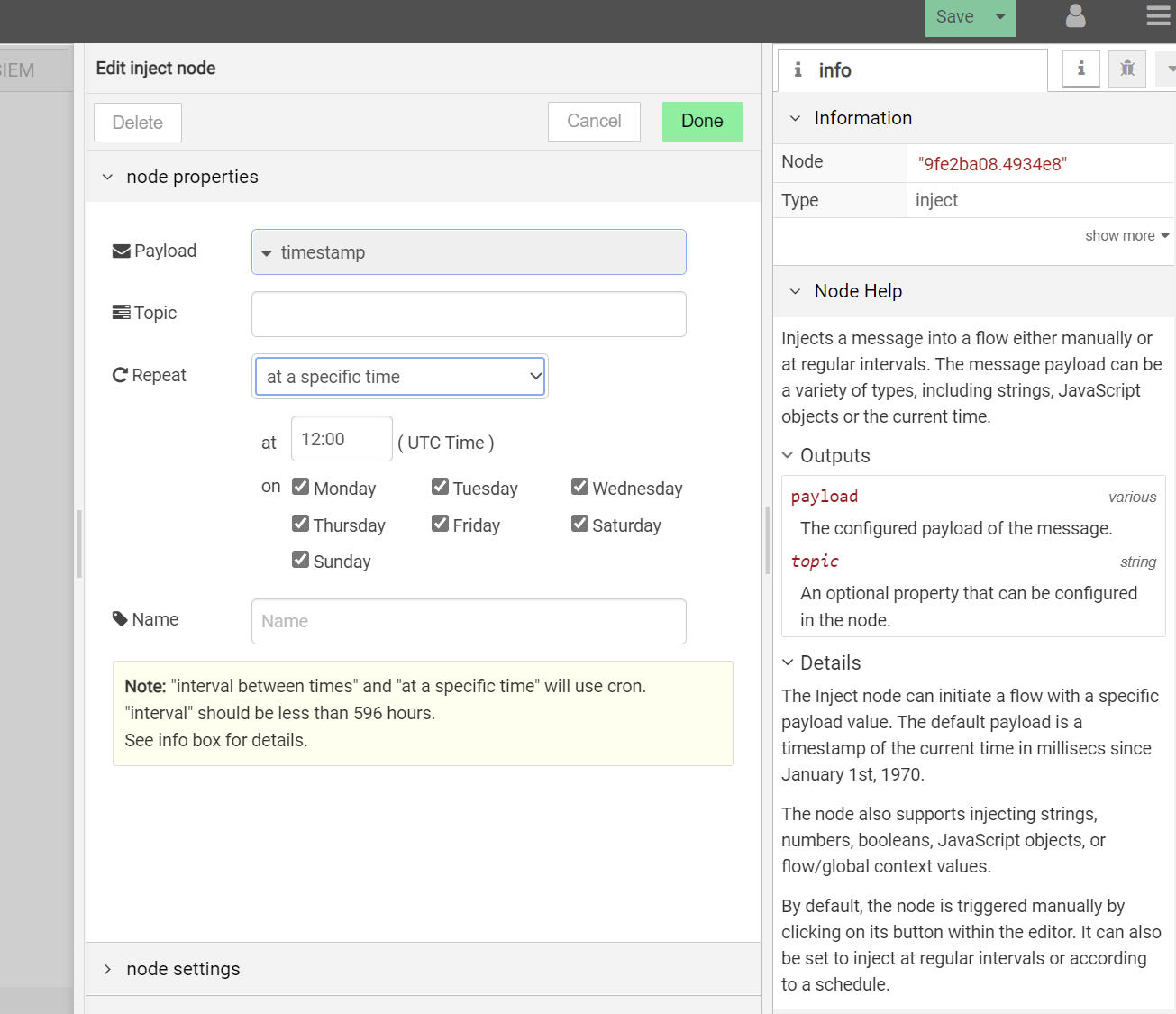

The trigger (inject) node supports two modes:

- Ad hoc ingestion: by default, an “inject” node is an ad hoc trigger. Clicking the small button next to it triggers ingestion once, which is useful for testing.

- Continuous ingestion: by changing the “Repeat” setting of the “inject” node, you can schedule it to run at a fixed time every day or at a fixed interval.
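The two modes differ only in when the trigger fires. As an illustration of the scheduling semantics (not DTonomy’s implementation), the following Python sketch computes the next run time for an interval schedule and for a daily fixed-time schedule. The function names are hypothetical.

```python
from datetime import datetime, time, timedelta


def next_interval_run(last_run: datetime, interval: timedelta) -> datetime:
    """Interval mode: fire again a fixed interval after the previous run."""
    return last_run + interval


def next_daily_run(now: datetime, at: time) -> datetime:
    """Daily mode: fire at a fixed time of day, today if still ahead, else tomorrow."""
    candidate = datetime.combine(now.date(), at)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    now = datetime(2024, 1, 15, 9, 30)
    print(next_interval_run(now, timedelta(minutes=5)))  # 2024-01-15 09:35:00
    print(next_daily_run(now, time(8, 0)))                # 2024-01-16 08:00:00
    print(next_daily_run(now, time(18, 0)))               # 2024-01-15 18:00:00
```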

Post

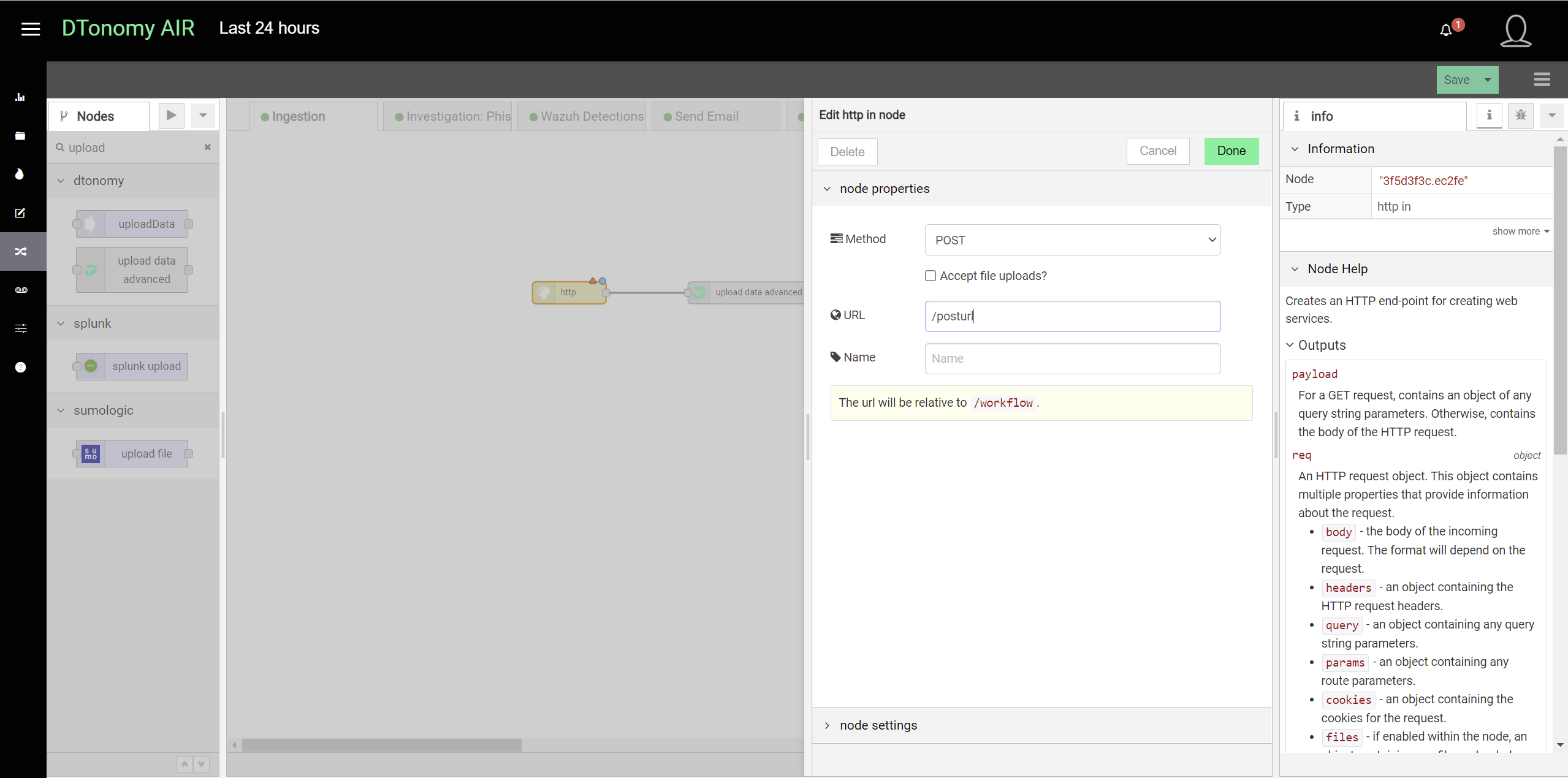

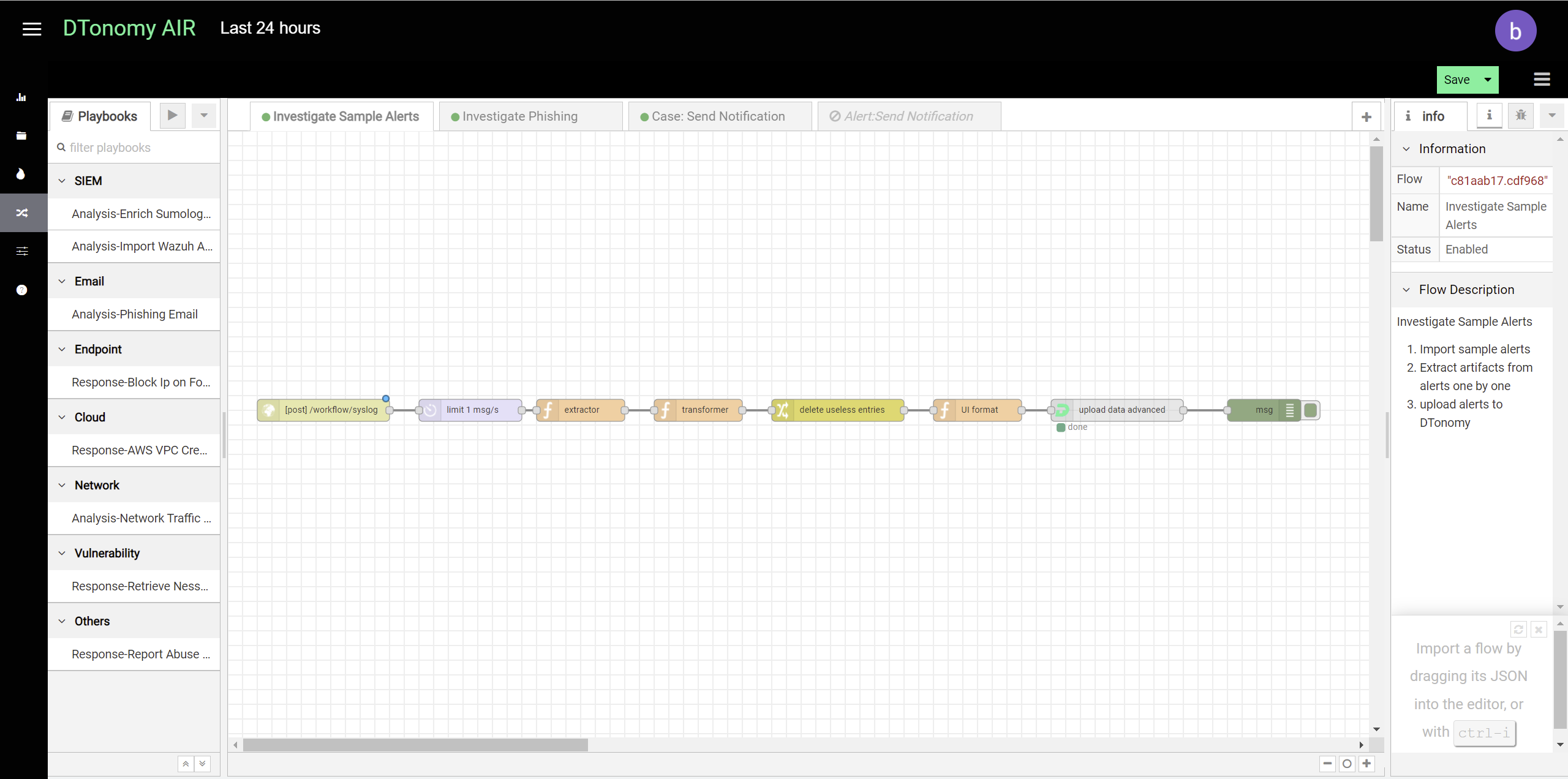

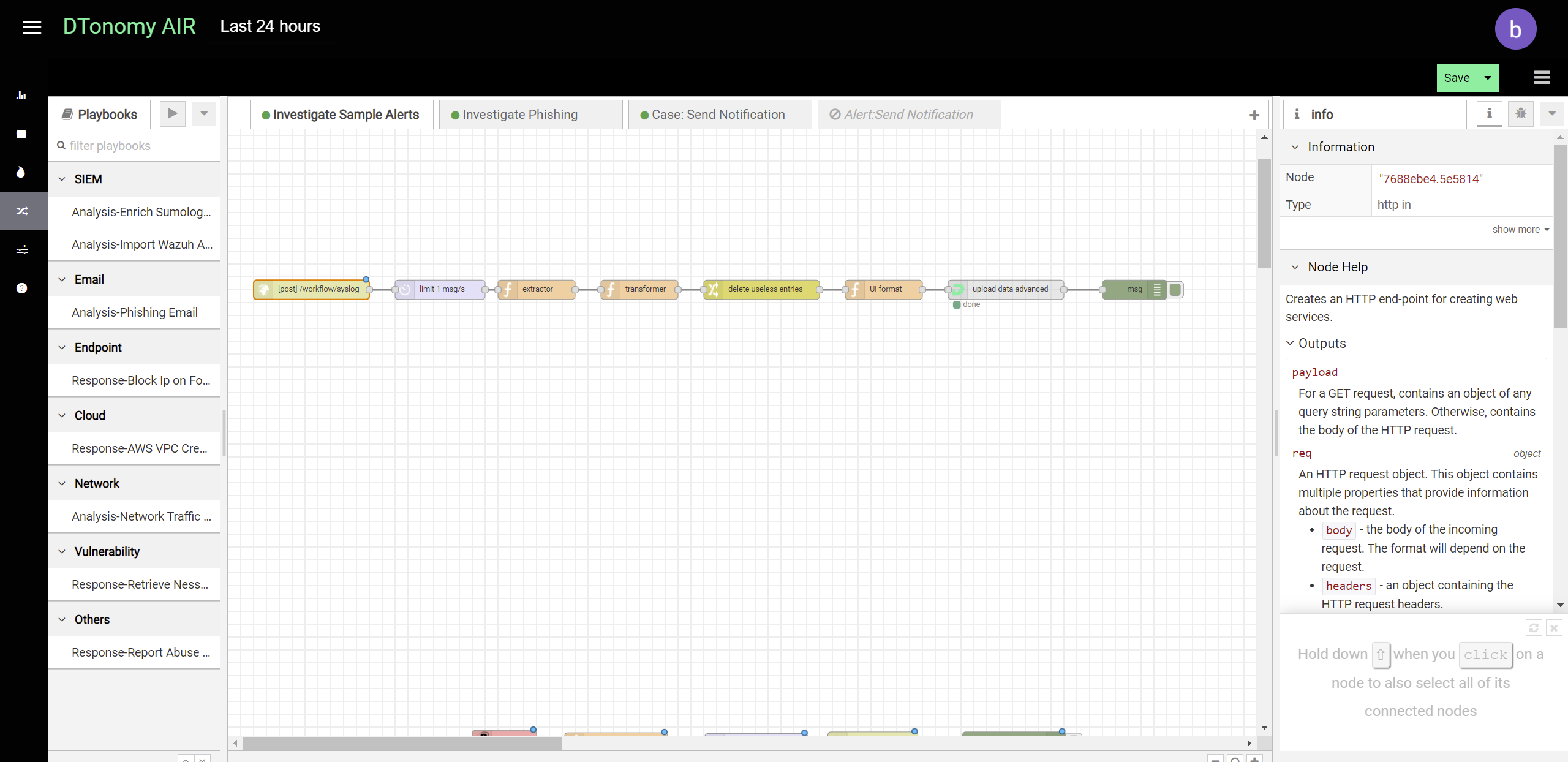

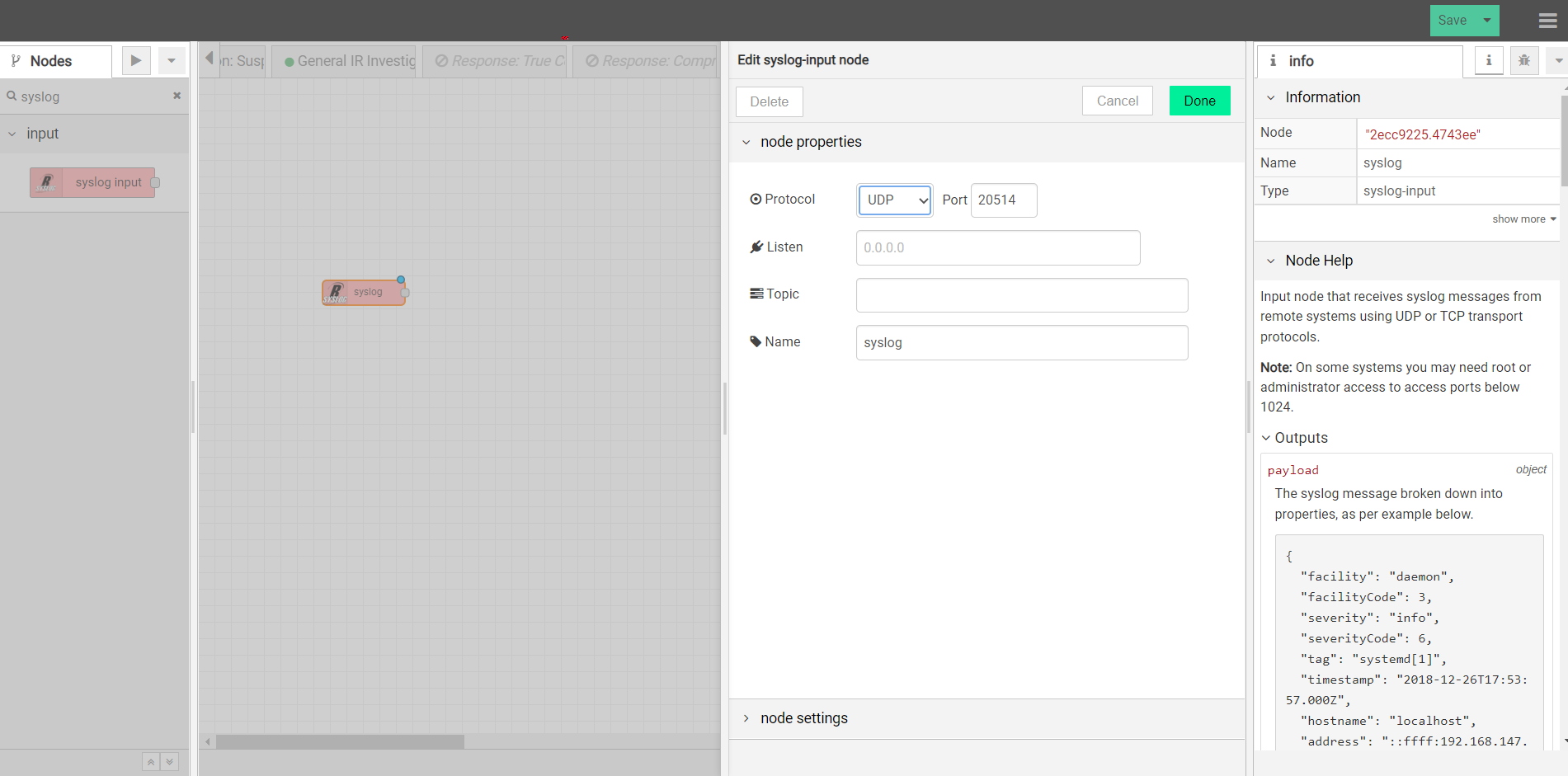

You can also post security alerts or other data directly to DTonomy’s platform. To do this, first set up a listener using an http node.

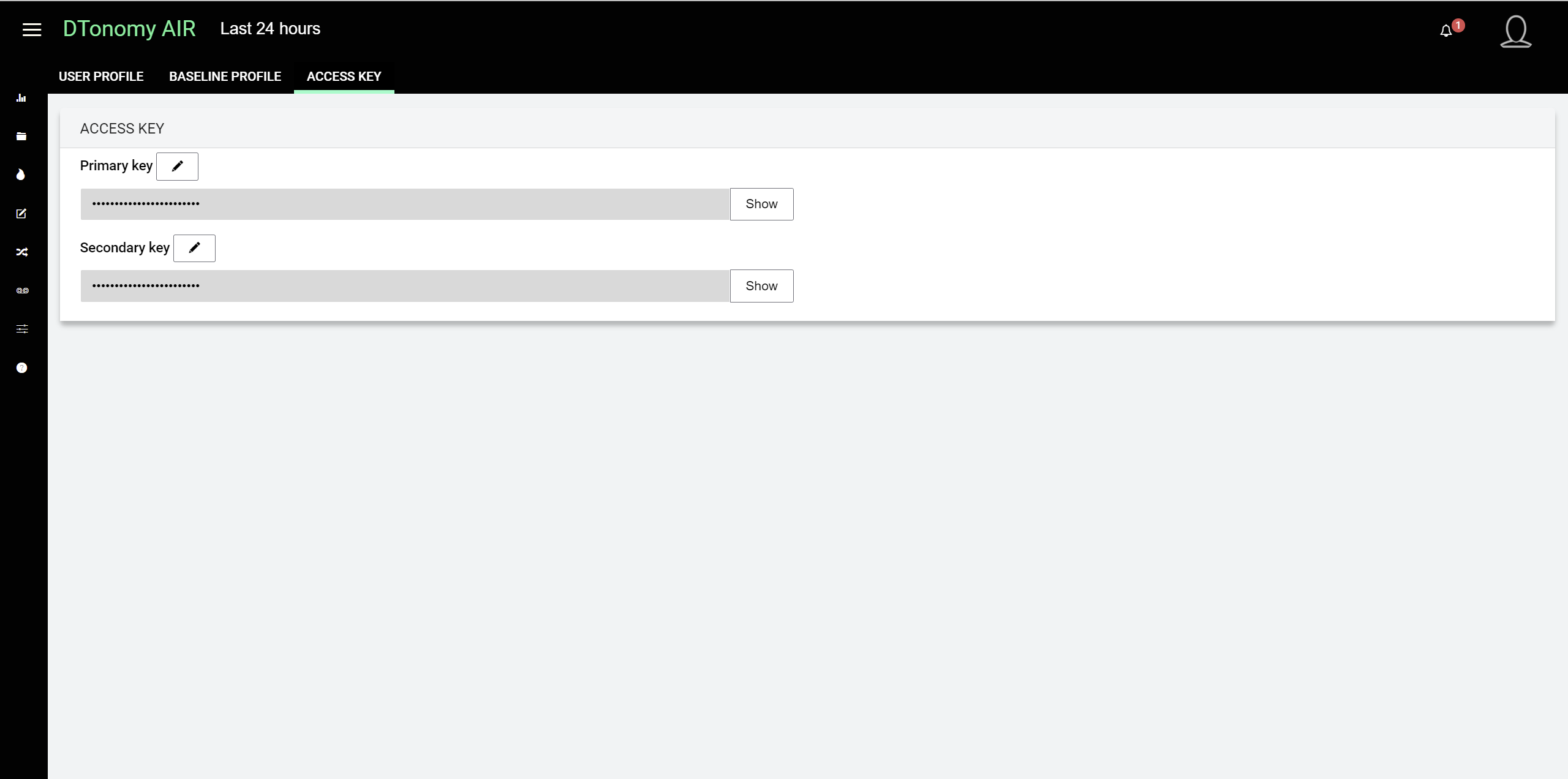

Add an “Upload data advanced” node after it to upload the data to DTonomy’s platform. Between the two, you can insert other nodes to perform parsing, normalization, or entity extraction. To construct the POST request, you need an API key and a tenant ID. You can find the API key in DTonomy’s web client.

Here is an example request. Replace alert.json (a placeholder file name) with a file containing the JSON data you want to send.

curl --location --request POST 'https://abc.dtonomy.com/workflow/syslog?tenantId=8' \
--header 'Authorization: 5712875e6b13c284ac975018' \
--header 'Content-Type: application/json' \
--data @alert.json

Existing Connectors

Aruba ClearPass

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure ClearPass to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring ClearPass are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

AWS

DTonomy has built-in integrations with AWS. You can use them to retrieve logs from AWS CloudTrail or security compliance data for IAM users.

Azure¶

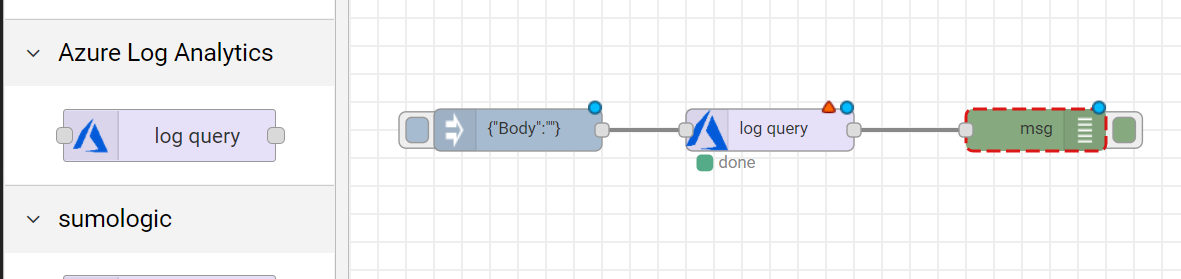

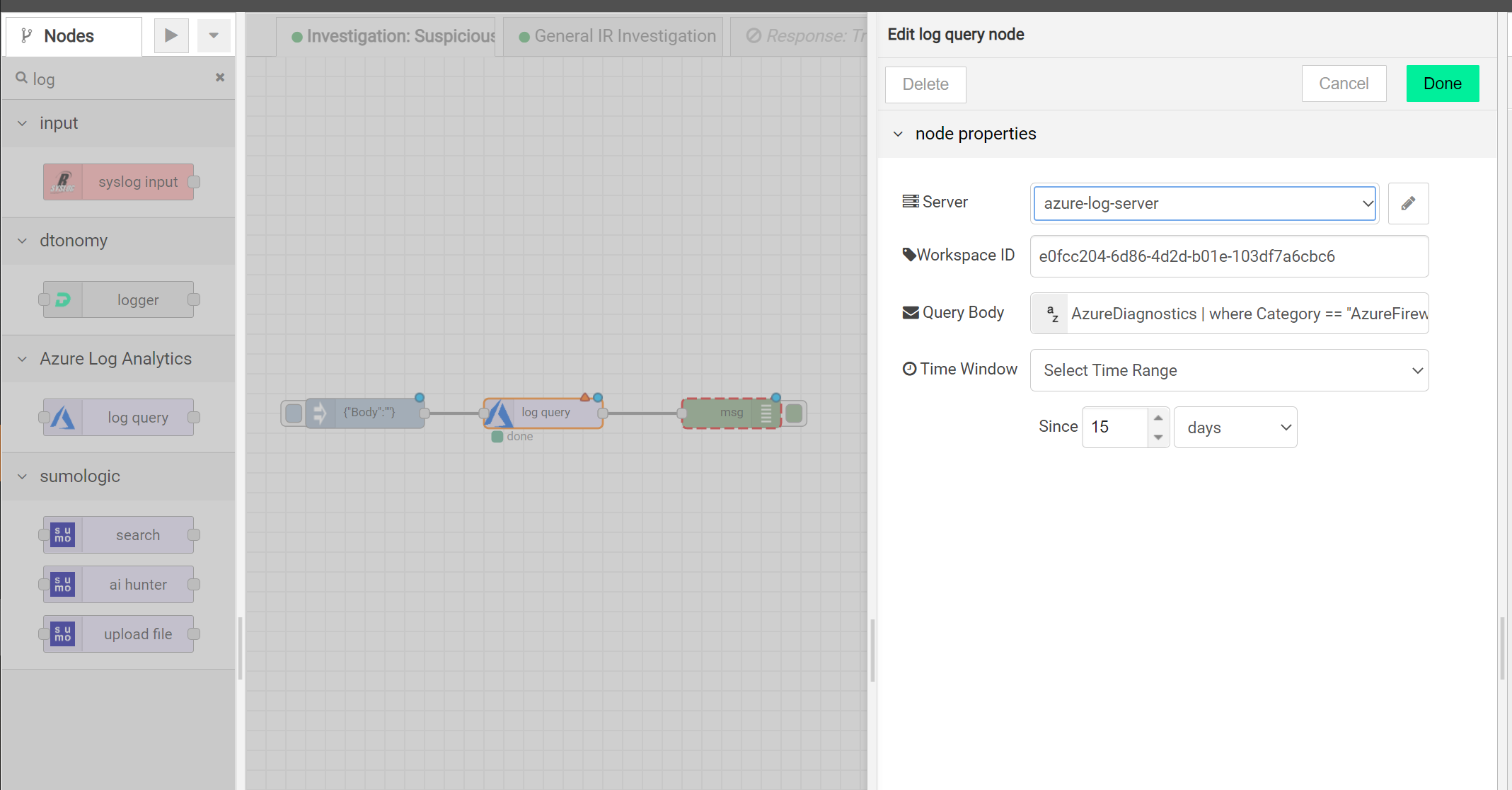

Retrieve logs from Azure for your specific query including firewall or other solution.

Here is the connector:

Here is an example:

Agent-based collector

You can install a lightweight agent on your endpoint to upload logs. Contact DTonomy for more information.

Akamai Security Events

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure Akamai to forward Syslog messages to the workflow instance provided by DTonomy.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

AlienVault OTX

Collect threat intelligence from AlienVault OTX.

Barracuda CloudGen Firewall

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure CloudGen to forward Syslog messages to the workflow instance provided by DTonomy.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Barracuda WAF

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure WAF to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring WAF to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Broadcom Symantec Data Loss Prevention (DLP)

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure DLP to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring DLP to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

BlackBerry CylancePROTECT

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure CylancePROTECT to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring CylancePROTECT to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Cisco ASA Firewall

You can ingest Cisco ASA firewall alerts or logs into DTonomy through the Syslog server provided by DTonomy.

First, configure your Cisco ASA firewalls to forward logs and alerts to DTonomy’s Syslog server within your network.

Then, once DTonomy’s Syslog server receives the data, it uploads the data to DTonomy’s cloud server.

Cisco Meraki

You can ingest firewall alerts from Cisco Meraki into DTonomy through the Syslog server provided by DTonomy.

First, configure Cisco Meraki to forward logs and alerts to DTonomy’s Syslog server within your network. More details on configuring the Cisco Syslog forwarder are here.

Then, once DTonomy’s Syslog server receives the data, it parses and normalizes the data and uploads it to DTonomy’s cloud server.
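Parsing a Syslog line typically starts with its priority value and any key=value fields. The Python sketch below decodes the RFC 3164/5424 priority (facility = PRI // 8, severity = PRI % 8) and collects key=value pairs. The sample line is illustrative and only loosely modeled on a Meraki flow log; it is an assumption, not Meraki’s exact format, and the function name is hypothetical.

```python
import re
from typing import Dict

PRI_RE = re.compile(r"^<(\d{1,3})>")   # leading <PRI> field
KV_RE = re.compile(r"(\w+)=(\S+)")     # key=value tokens such as src=10.0.0.5

SEVERITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]


def parse_syslog(line: str) -> Dict[str, object]:
    """Return the raw line plus decoded facility/severity and any key=value fields."""
    record: Dict[str, object] = {"raw": line}
    match = PRI_RE.match(line)
    if match:
        pri = int(match.group(1))
        record["facility"] = pri // 8             # e.g. 16 = local0
        record["severity"] = SEVERITIES[pri % 8]
        line = line[match.end():]
    record.update(dict(KV_RE.findall(line)))
    return record


if __name__ == "__main__":
    sample = ("<134>1 1700000000.123 MX84 flows src=10.0.0.5 dst=8.8.8.8 "
              "protocol=udp sport=51514 dport=53 pattern: allow all")
    print(parse_syslog(sample))
```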

Corelight Zeek

You can ingest Corelight Zeek alerts or logs into DTonomy through the Syslog server provided by DTonomy.

First, configure Corelight Zeek to forward logs and alerts to DTonomy’s Syslog server within your network.

Then, once DTonomy’s Syslog server receives the data, it uploads the data to DTonomy’s cloud server.

Check Point Firewall

You can ingest Check Point FW1/VPN1 alerts or logs into DTonomy through the Syslog server provided by DTonomy.

First, configure Check Point FW1/VPN1 to forward logs and alerts to the local Syslog server within your network.

Then, once DTonomy’s Syslog server receives the data, it uploads the data to DTonomy’s cloud server.

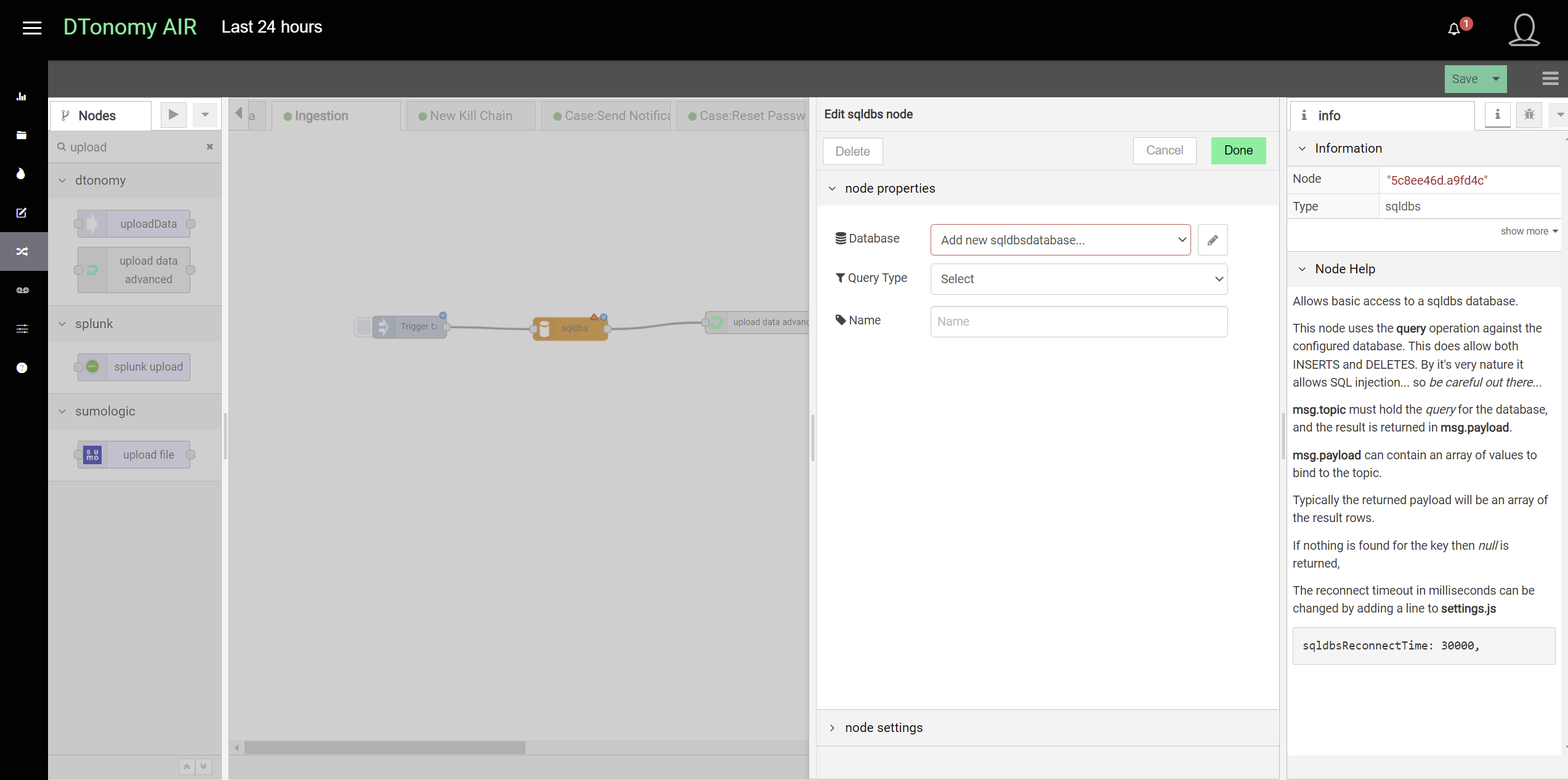

Database collector

You can retrieve data directly from a relational database in your environment, such as MSSQL, MySQL, SQLite, or PostgreSQL, and then connect the results to an “Upload data advanced” node to upload them to DTonomy’s platform. In between, you can insert other nodes to perform normalization or entity extraction.

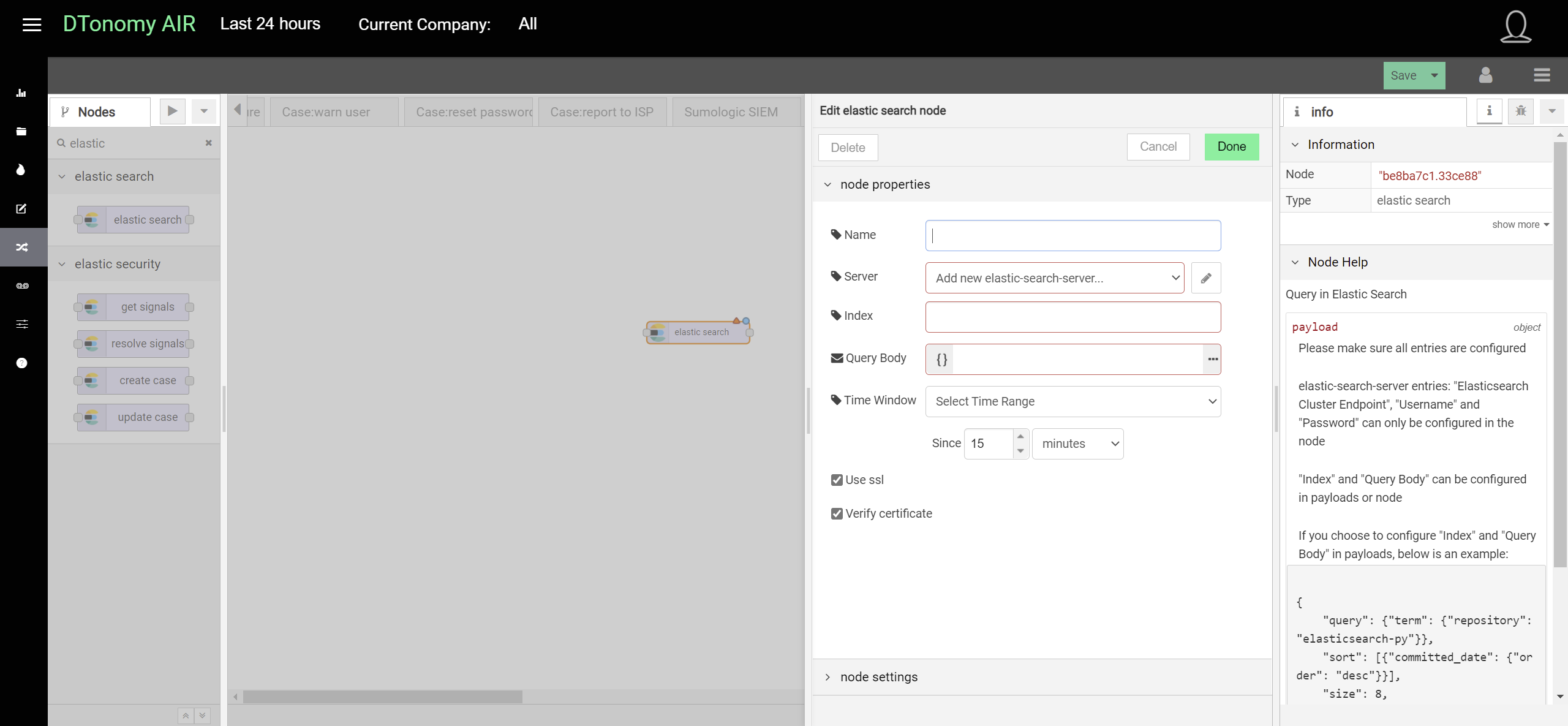

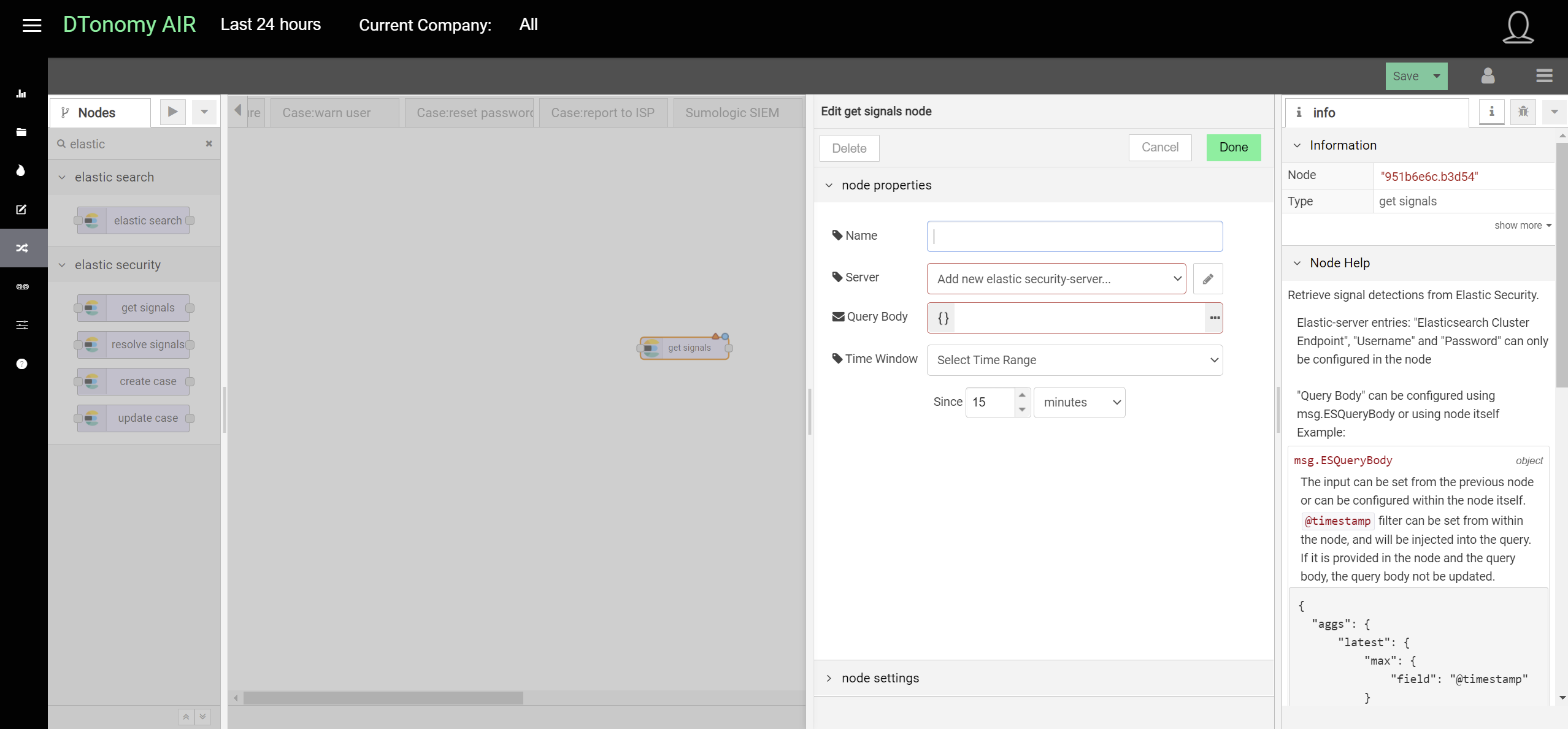

Elasticsearch

DTonomy has two types of Elasticsearch integrations: one runs a generic Elasticsearch query, and the other retrieves security detections only.

Exabeam

There are two ways to integrate with Exabeam.

Method 1: Ingest data via Syslog.

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure Exabeam to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring Exabeam to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Method 2: Pull data via the API integration.

ExtraHop

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure ExtraHop to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring ExtraHop to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Fidelis

You can configure Fidelis to send alerts directly to the DTonomy endpoint using a provisioned auth key.

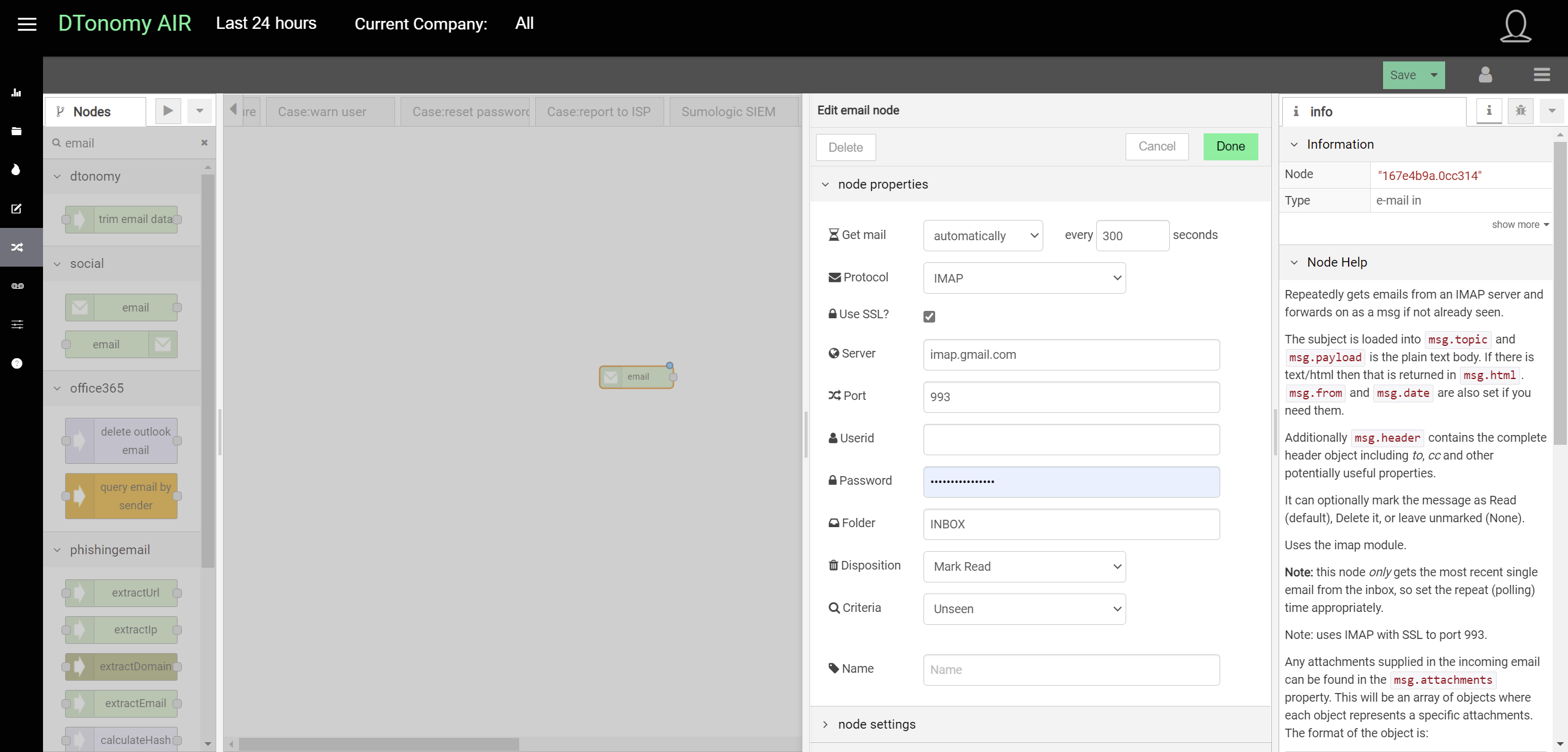

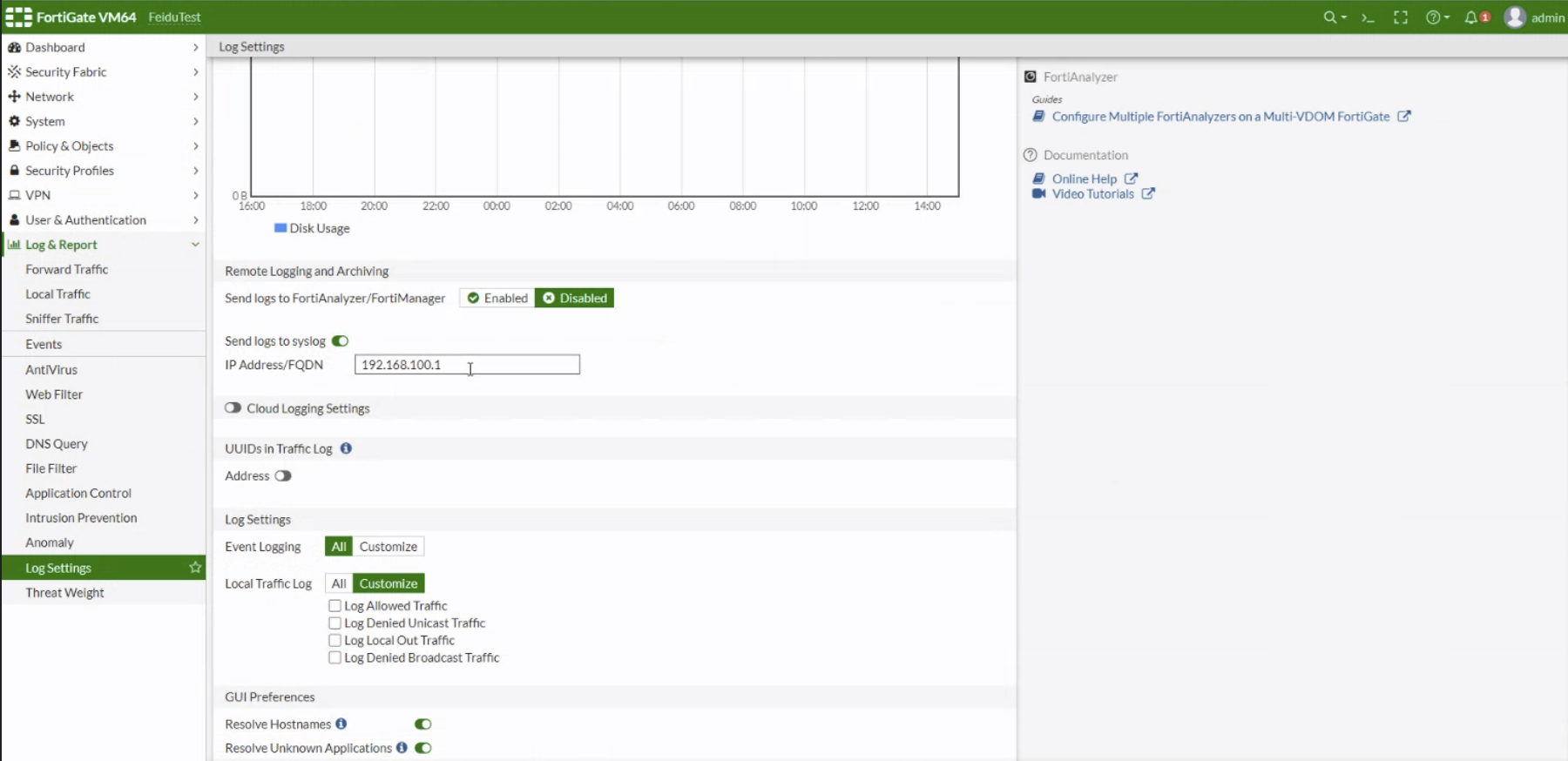

Fortinet FortiGate

To upload FortiGate logs and alerts to DTonomy, use the local Syslog connector provided by DTonomy.

First, configure FortiGate to forward its logs to that Syslog connector.

F5 Networks (ASM)

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure ASM to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring ASM to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

GitHub

Retrieve logs from a GitHub repository using the existing API connector. Enter a token when accessing a private repository.

HaveIBeenPwned

Collect breach results related to your domain from HaveIBeenPwned.

Infoblox Network Identity Operating System (NIOS)

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure NIOS to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring NIOS to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Jira

Retrieve tickets from Jira for investigation using DTonomy’s built-in API integration.

Juniper SRX

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure Juniper to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring Juniper to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Kace

Retrieve machine inventory from Kace using the existing connector.

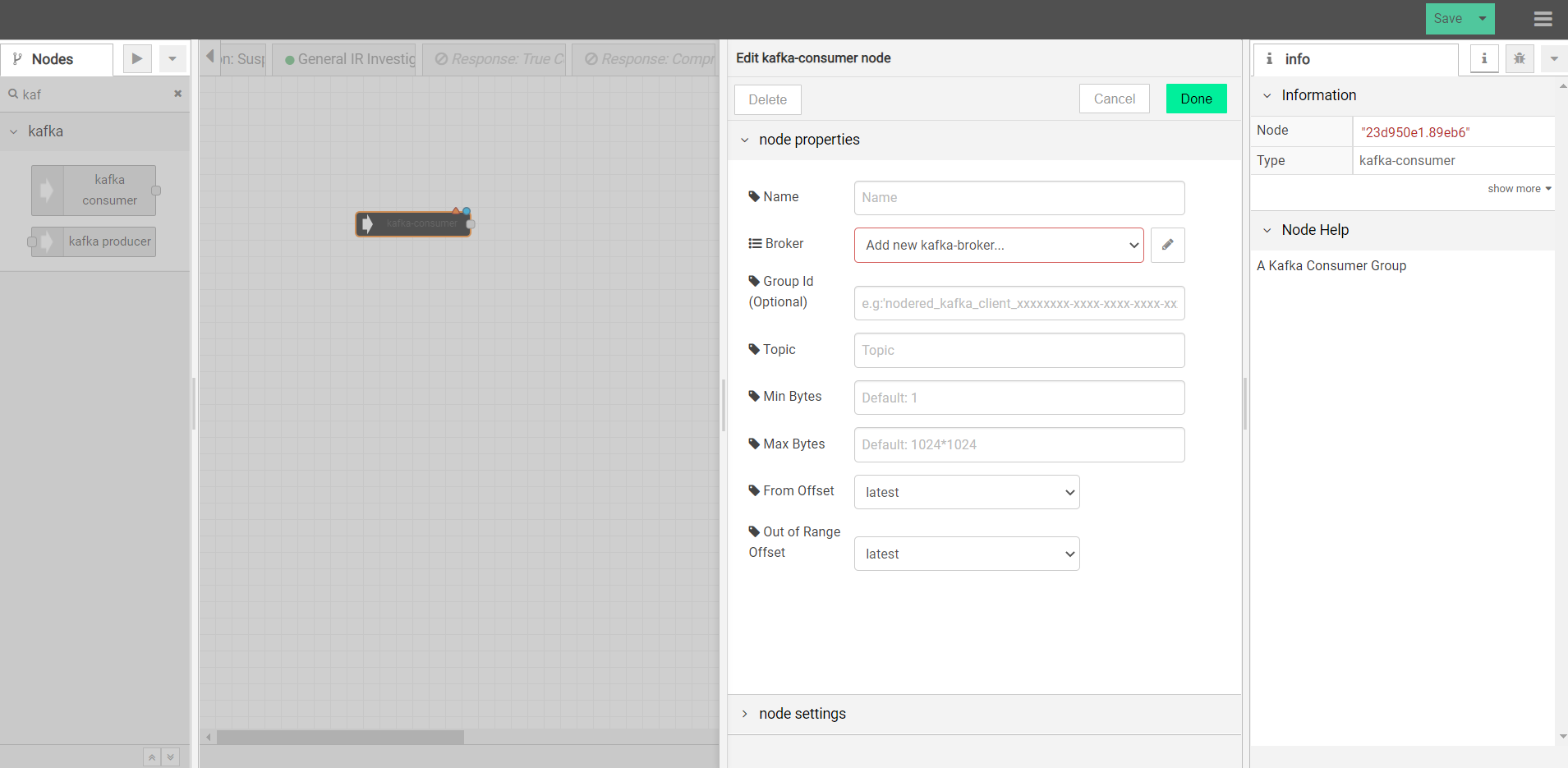

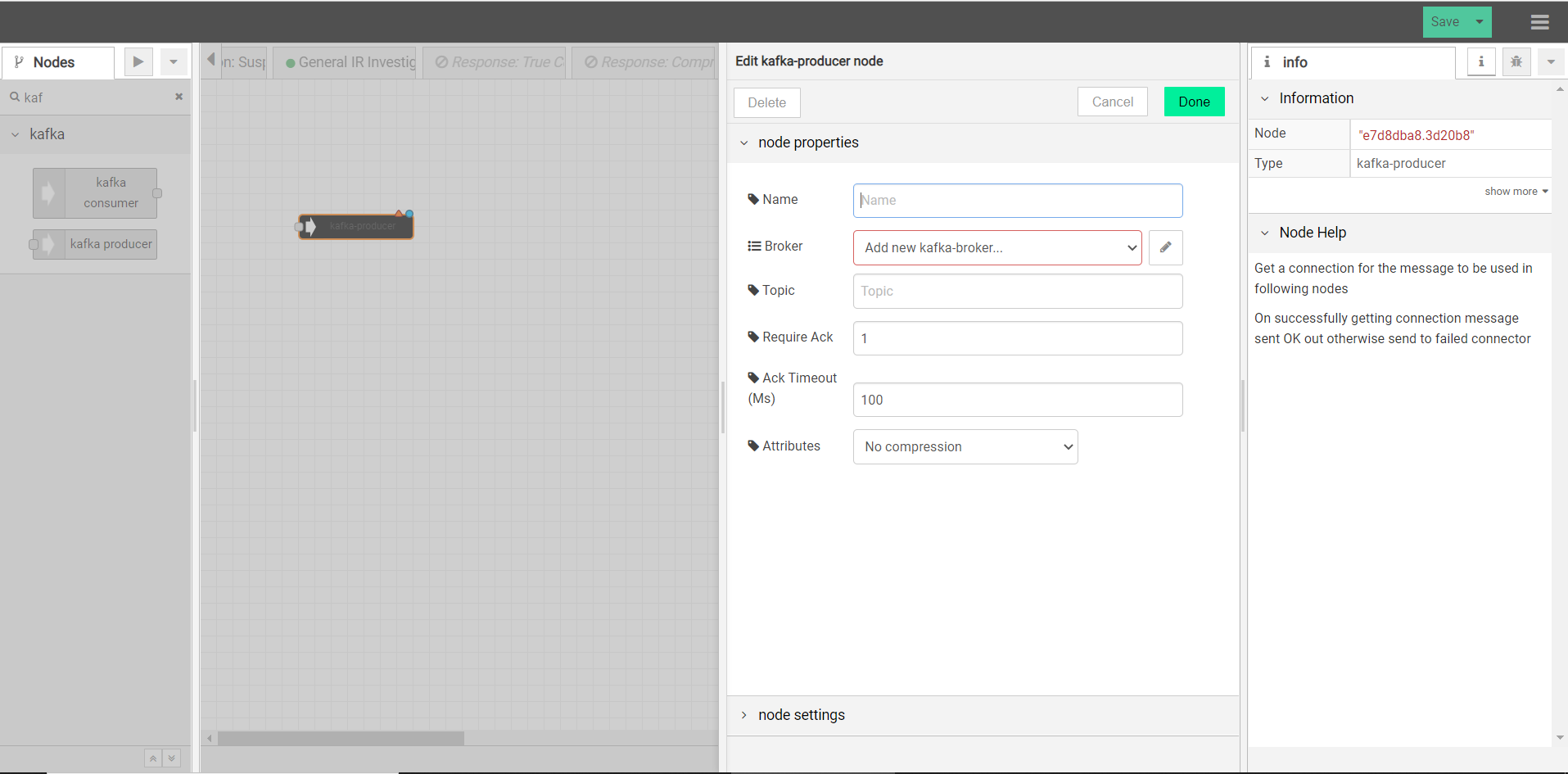

Kafka

DTonomy has two Kafka nodes: a consumer and a producer.

The Kafka Consumer node reads a stream from a specified Kafka topic.

The Kafka Producer node publishes a stream to a specified Kafka topic.

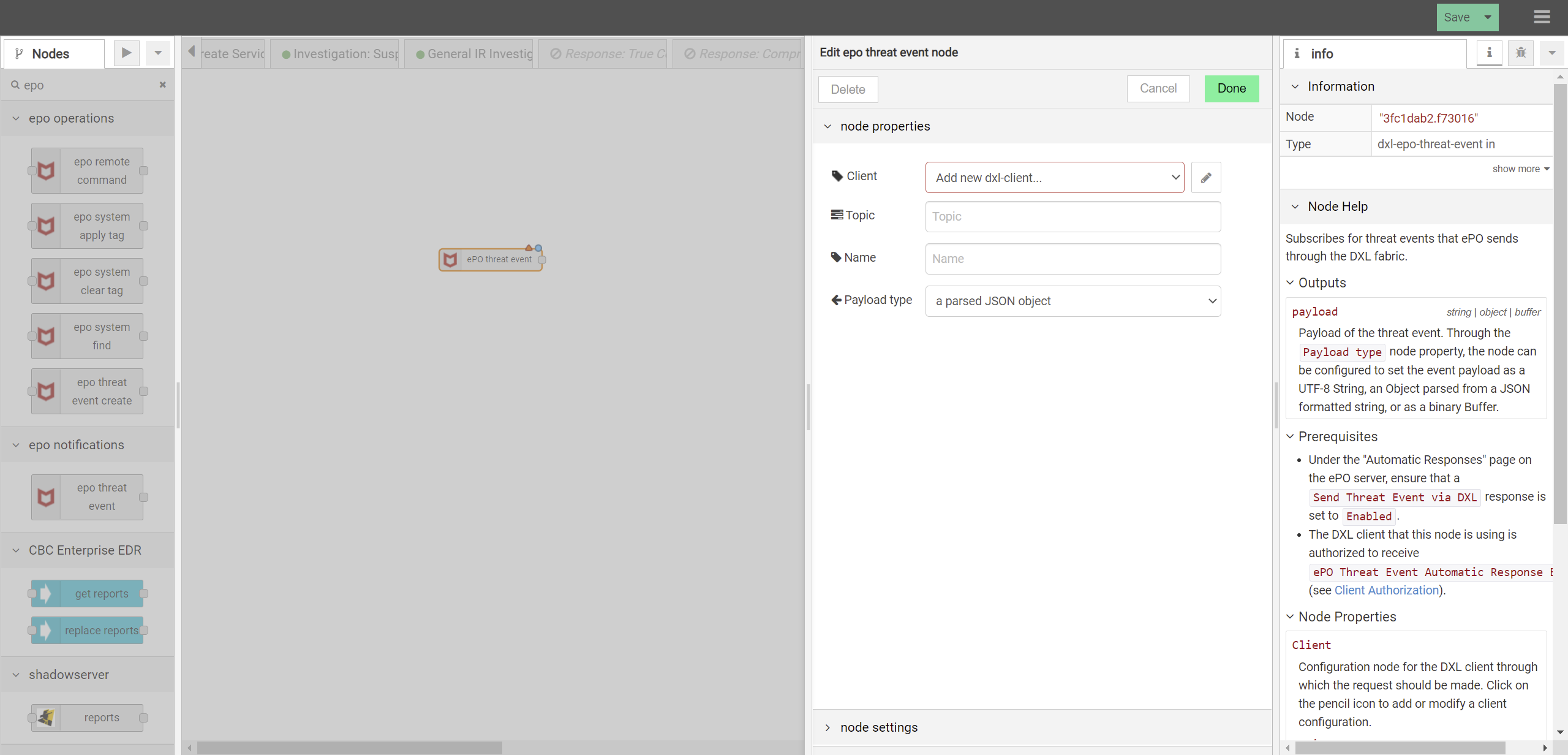

McAfee

DTonomy provides a connector that enables you to subscribe to threat events that the McAfee ePO server sends through the DXL fabric.

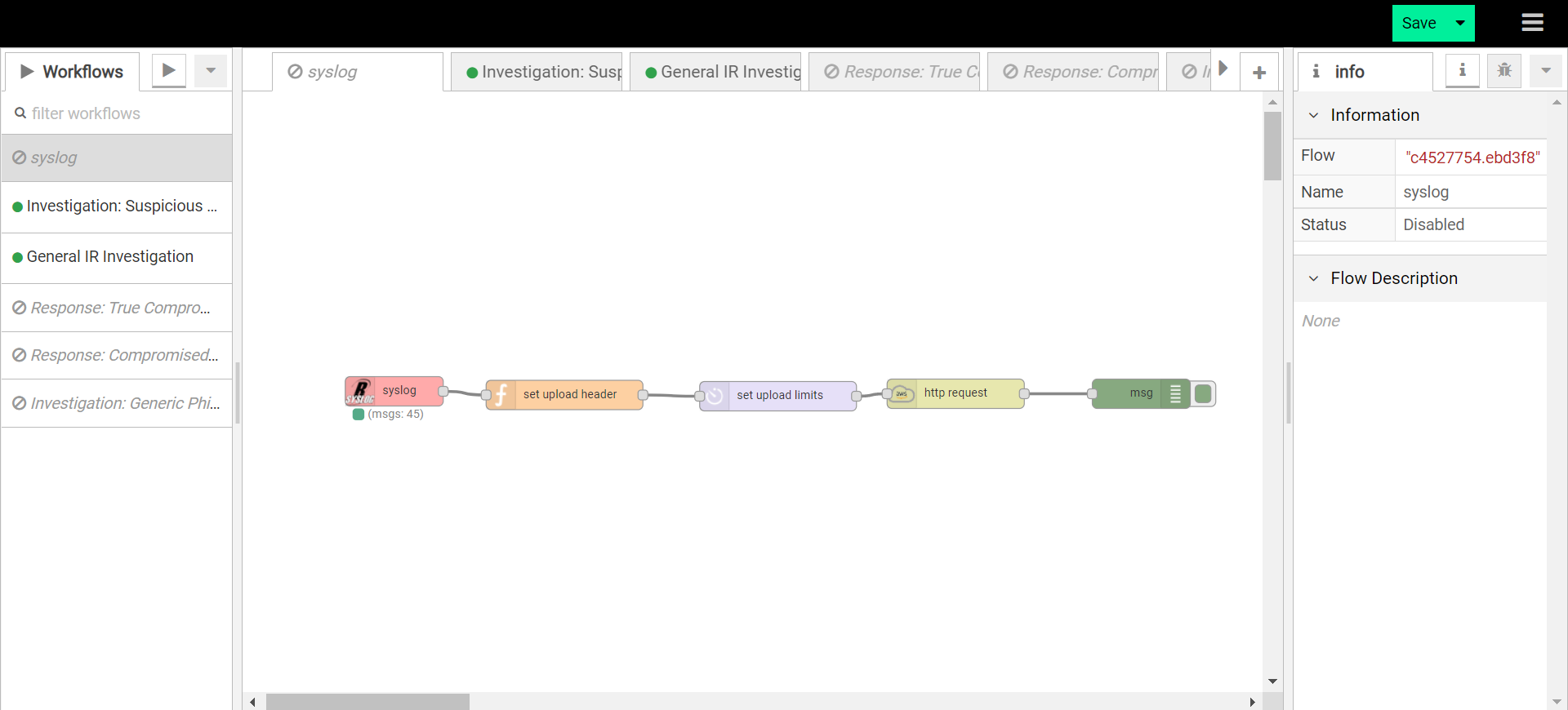

OPNsense

To upload OPNsense logs and alerts to DTonomy, deploy the Syslog connector provided by DTonomy locally; it uploads the logs and alerts to the DTonomy endpoint. To connect to the Syslog server, first configure OPNsense to forward its logs to the Syslog listener.

The local workflow provided by DTonomy takes the Syslog stream as input and uploads those alerts and logs to the DTonomy server.

On the DTonomy server, you can set up a workflow to perform further processing, such as parsing and normalization.

Palo Alto Networks (PAN-OS)

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure Palo Alto to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring Palo Alto to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Proofpoint Targeted Attack Protection (TAP)

Retrieve TAP events from Proofpoint using the integrated API.

Pulse Connect Secure

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure Pulse to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring Pulse to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

QRadar

Retrieve QRadar offenses within a specified range using the existing API connector.

Qualys

Retrieve scan results by scan reference using the existing API connector.

Rapid7 AppSpider

Retrieve vulnerability reports from AppSpider using the existing API connector.

Rapid7 Nexpose

Retrieve vulnerability reports from Nexpose using the existing API connector.

SCCM

Query SCCM for logs or reports.

ServiceNow

Retrieve tickets from ServiceNow using DTonomy’s existing connector.

SonicWall Firewall

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure SonicWall to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring SonicWall to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Sentry

Retrieve issues from Sentry.

Shadowserver

With the built-in connector, you can continuously retrieve threat reports from Shadowserver.

Shodan

Collect scan results from Shodan.

Signal Sciences

Retrieve security alerts from Signal Sciences.

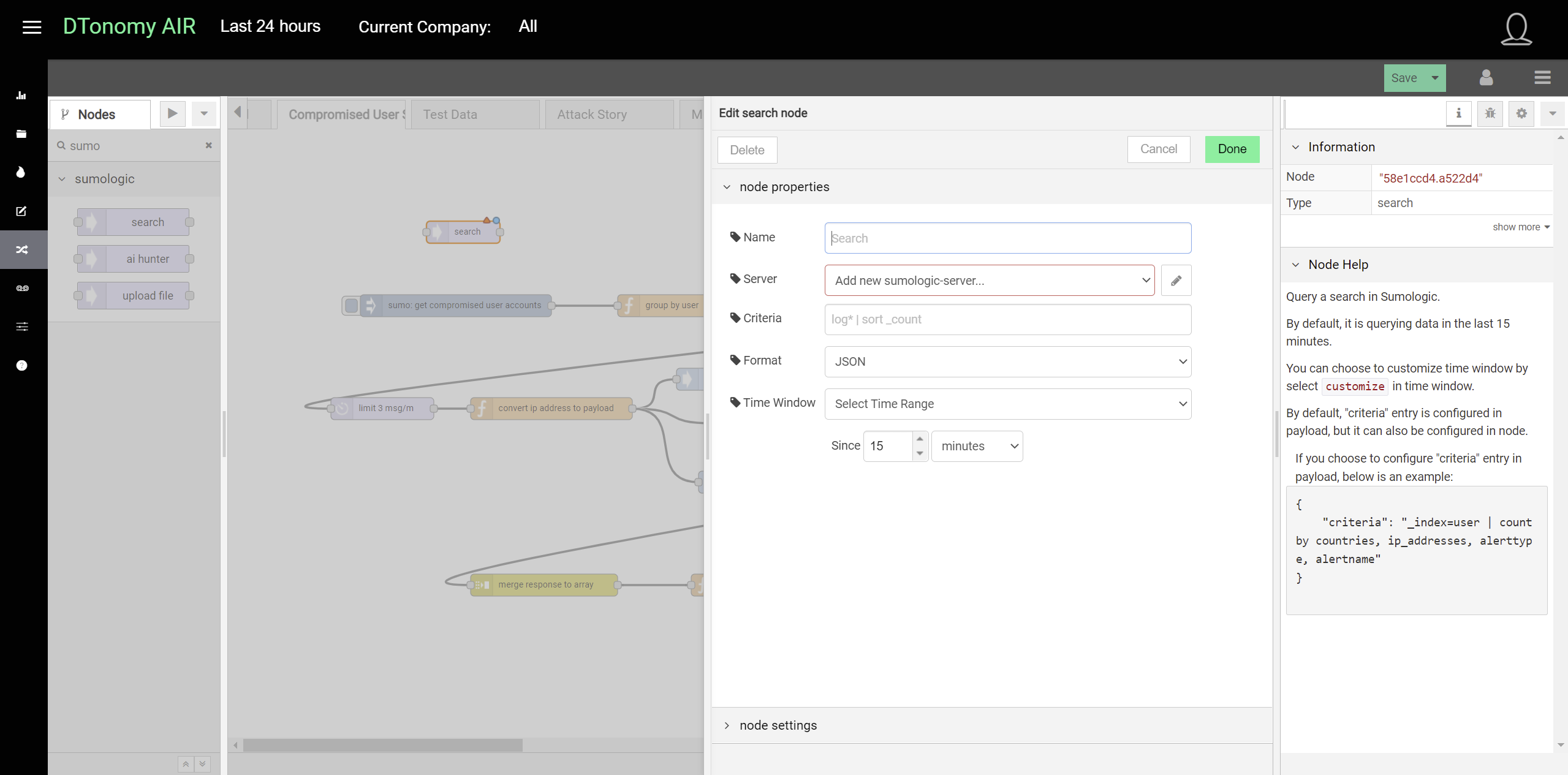

Sumo Logic

DTonomy’s built-in Sumo Logic integration enables you to run a query on Sumo Logic periodically and pull data to DTonomy for further correlation and response.

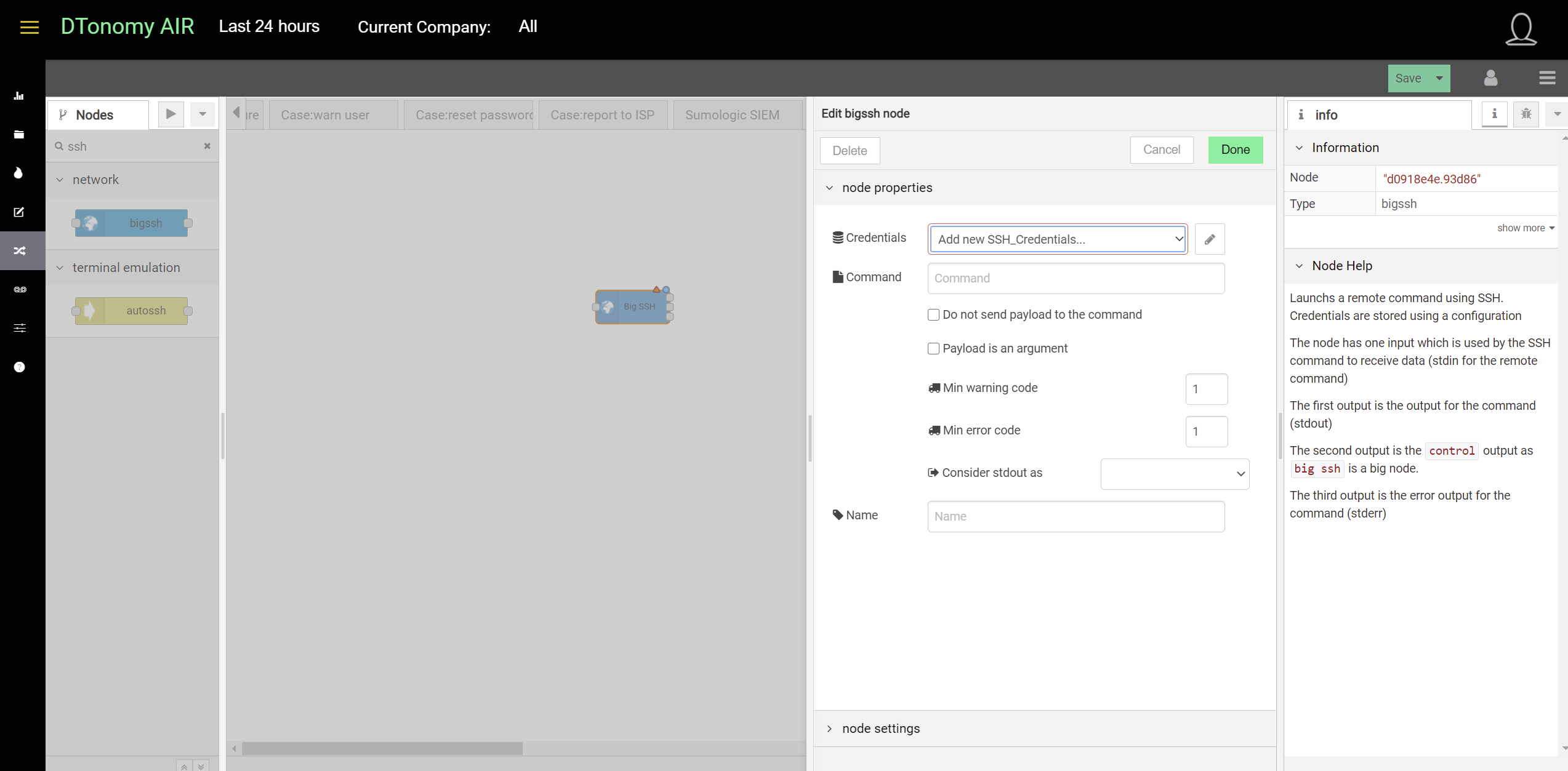

SSH

DTonomy’s bigssh node enables you to pull data from your on-premises environment into the cloud, where you can correlate it and automate responses.

Trend Micro Deep Security

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure Deep Security to forward Syslog messages to the workflow instance provided by DTonomy. Instructions for configuring Deep Security to forward Syslog messages are here.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Trend Micro TippingPoint

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure TippingPoint to forward Syslog messages to the workflow instance provided by DTonomy.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Tenable IO

Retrieve scan results from Tenable IO using the existing API connector.

Tenable SC

Retrieve scan results from Tenable SC using the existing API connector.

Vectra Cognito Detect

- Deploy DTonomy’s workflow instance on an on-premises server, where it acts as a Syslog server.

- Configure the Vectra agent to forward Syslog messages to the workflow instance provided by DTonomy.

- Once the local Syslog server receives the messages, it uploads them directly to DTonomy’s cloud endpoint.

Wazuh¶

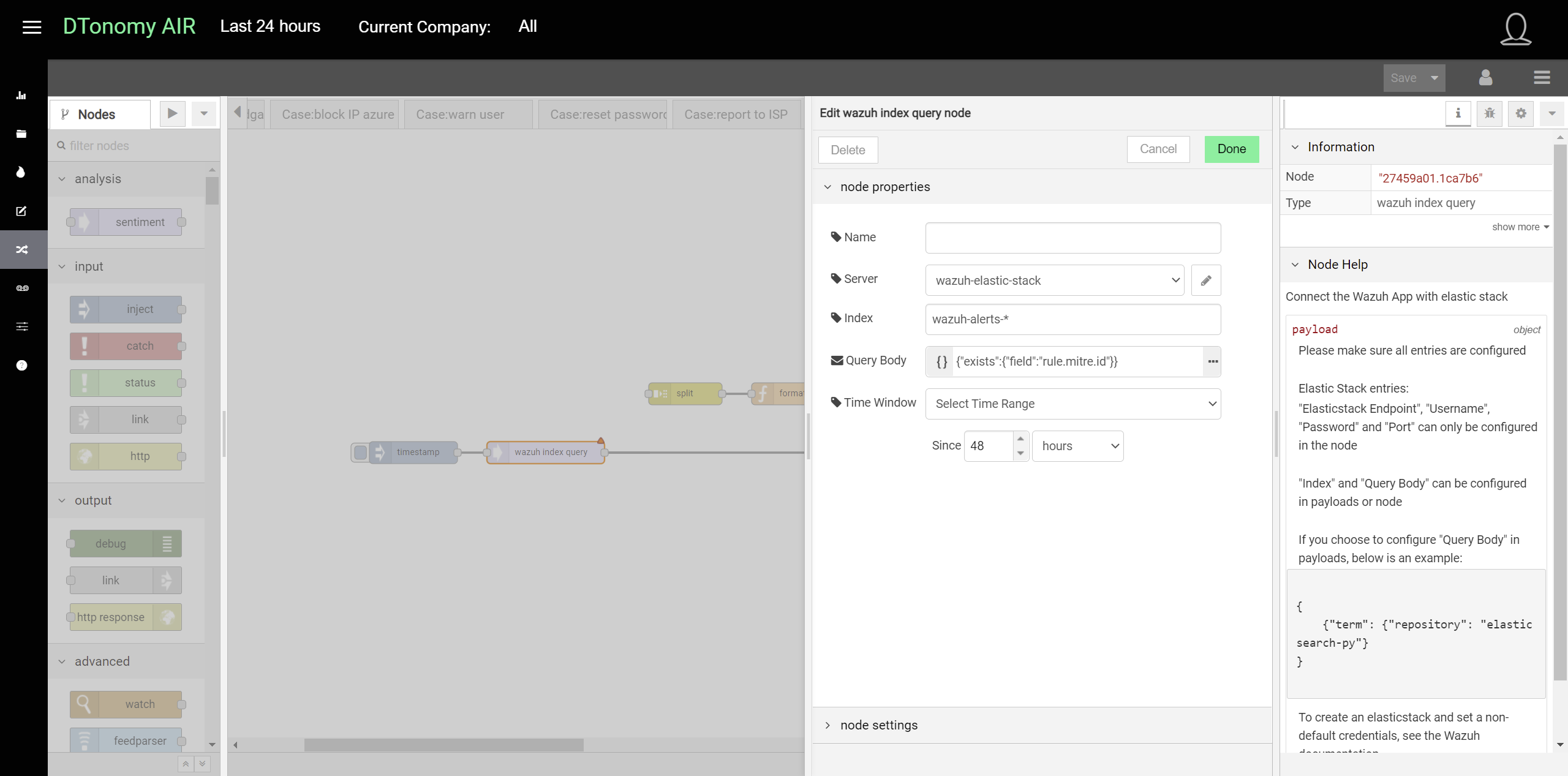

Wazuh is an open-source end point detection application built on top of elastic search. Our built-in integrations enable you to query the detection results from Wazuh periodically by running queries.

Zscaler Cloud Firewall

You can configure Zscaler to forward firewall logs to DTonomy’s Syslog server, which then forwards them to DTonomy’s endpoint. For details, see Zscaler’s documentation, “Adding NSS Feeds for Firewall Logs.”

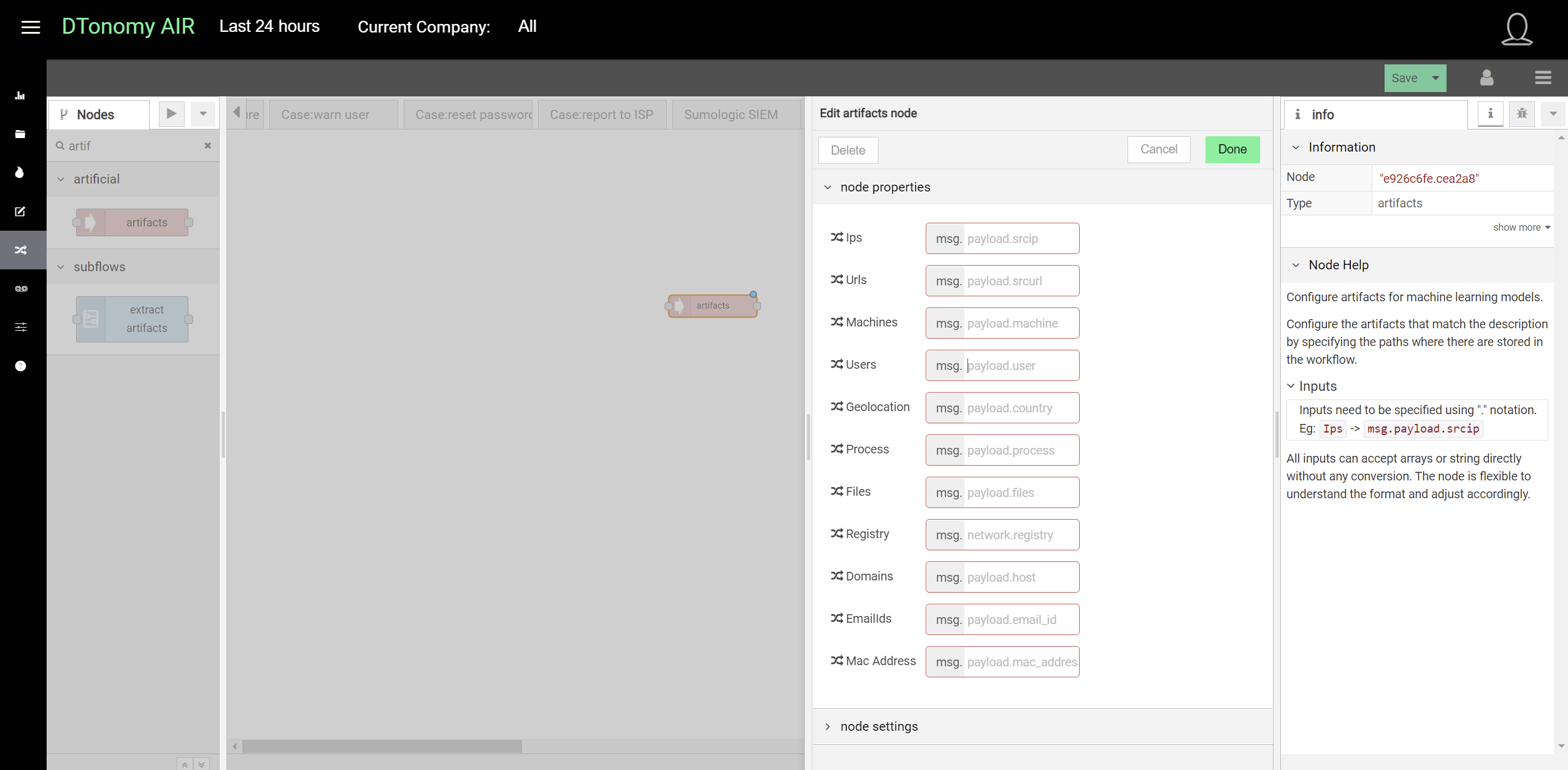

Artifacts Extraction (Normalize)

Extracting artifacts and normalizing data is an important task in security investigation and response, because it enables further intelligence enrichment. DTonomy’s standard data structure includes the following artifacts:

- ips

- urls

- machines

- users

- geolocation

- process

- files

- registry

- domains

- email ids

- mac address

Generic Artifacts Extraction

DTonomy provides artifact nodes that map fields in your detections to the common artifact types.

If your detections come in a variety of formats, we recommend adding a function node before the artifacts node to customize the artifact mapping.